Using artificial intelligence to generate images is growing in popularity. Recently, an application called Lensa AI became popular on TikTok and other apps, reaching close to 1 million users in a very short time. However, the popularity of AI-generated images has raised major privacy and ethics concerns.

Prisma Labs originally launched Lensa as a photo editing tool in 2018, and the app went viral a few weeks ago after releasing its “Magic Avatars” feature. The app uses 10 photos of the user to generate portraits with the neural network Stable Diffusion. Most of us have probably seen many Lensa AI portraits on social media platforms, in abstract, photorealistic, or anime styles.

Stable Diffusion was trained on a dataset of more than two billion images culled from websites all over the internet, including Pinterest, Flickr, and Getty Images.

As AI-generated images spread across social media, the debate has reignited over the ethics of making images with models that have been trained on other people’s artwork or photos.

Many in the digital art community have voiced concerns about AI models mass-producing images so cheaply, particularly when those images imitate styles that actual artists have spent years perfecting.

First, there’s the broader issue of Magic Avatars being created with a model trained on the work of artists without their permission. In addition, Lensa uses the images that users submit to train its AI, and users grant the company the right to “use, reproduce, modify, adapt, and create derivative works” in perpetuity and royalty-free, as stated in its privacy policy.

The second issue is the AI’s apparent lack of nuance in treating the faces of people of color, as well as the oversexualized nature of many images generated from photos uploaded by women. Lensa AI trains its model on the uploaded images. Lensa’s terms of service require users to submit only appropriate content containing “no nudes” and “no kids, adults only.”

Olivia Snow, a research fellow at UCLA’s Center for Critical Internet Inquiry, said, “Each concern is valid, but less discussed are the app’s more sinister violations, namely the algorithmic tendency to sexualize subjects to an extent that is not only uncomfortable but potentially dangerous.” She added that “many users, primarily women, have seen that even when they upload modest photos, the app not only generates nudes but also ascribes cartoonishly sexualized images, like sultry poses and gigantic breasts, to their images.”

Here is a thread by Prisma Labs about AI training:

Seeing plenty of thoughts online about the future of digital art in connection with AI generations, we decided to share some information on how AI generates images and why it will not replace digital artists. 🧵🧵🧵

— Prisma Labs (@PrismaAI) December 6, 2022

Also, Prisma Labs said that AI-generated images “can’t be described as exact replicas of any particular artwork.”

On the other hand, according to the terms of the agreement, Lensa may use the photos, videos, and other user content for “operating or improving Lensa” without compensation.

“We’re learning that even if you’re using it for your own inspiration, you’re still training it with other people’s data,” said Jon Lam, a storyboard artist at Riot Games. “Anytime people use it more, this thing just keeps learning. Anytime anyone uses it, it just gets worse and worse for everybody.”

In another Twitter thread, Lauryn Ipsum pointed out that some of the Lensa AI-generated images bear the signature of the original artist. That raises another question: if the model is only trained on images, rather than collecting or copying them, how do some of its outputs contain an artist’s signature?

I’m cropping these for privacy reasons/because I’m not trying to call out any one individual. These are all Lensa portraits where the mangled remains of an artist’s signature is still visible. That’s the remains of the signature of one of the multiple artists it stole from.

— Lauryn Ipsum (@LaurynIpsum) December 6, 2022

A 🧵 https://t.co/0lS4WHmQfW pic.twitter.com/7GfDXZ22s1

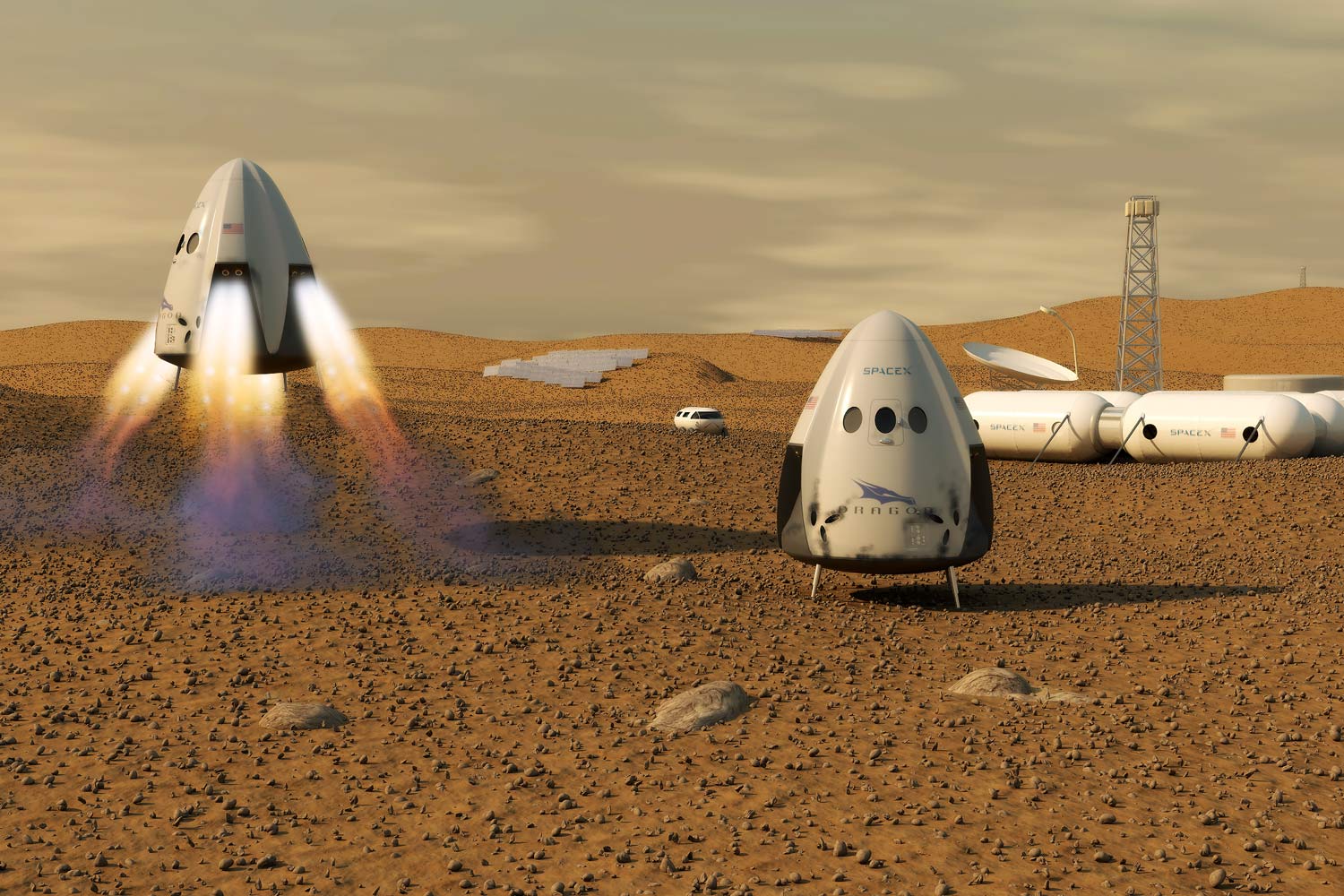

Also, a few months ago, Jason M. Allen, a game designer, won first place in an art competition; his winning image, titled “Théâtre D’opéra Spatial” (French for “Space Opera Theater”), was made with Midjourney. He posted about the achievement on Discord, and it went viral on Twitter. “This sucks for the exact same reason we don’t let robots participate in the Olympics,” one Twitter user wrote.

In 2023, we will probably be discussing AI-generated ‘art’ even more. Let’s see what happens next.