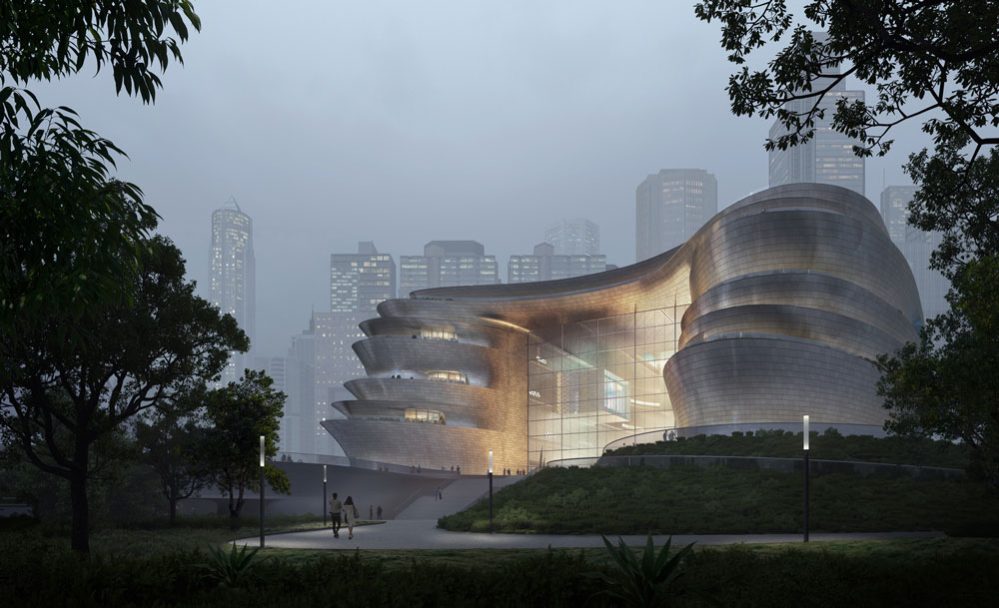

Google Research has introduced Lumiere, a text-to-video diffusion model built on an architecture called Space-Time U-Net. It is designed to synthesize videos that depict realistic, diverse, and coherent motion, which remains a significant challenge in video synthesis. Lumiere is currently in beta and is available for use.

“To this end, we introduce a Space-Time U-Net architecture that generates the entire temporal duration of the video at once through a single pass in the model. This is in contrast to existing video models which synthesize distant keyframes followed by temporal super-resolution — an approach that inherently makes global temporal consistency difficult to achieve,” the researchers wrote in the paper, “Lumiere: A Space-Time Diffusion Model for Video Generation.”

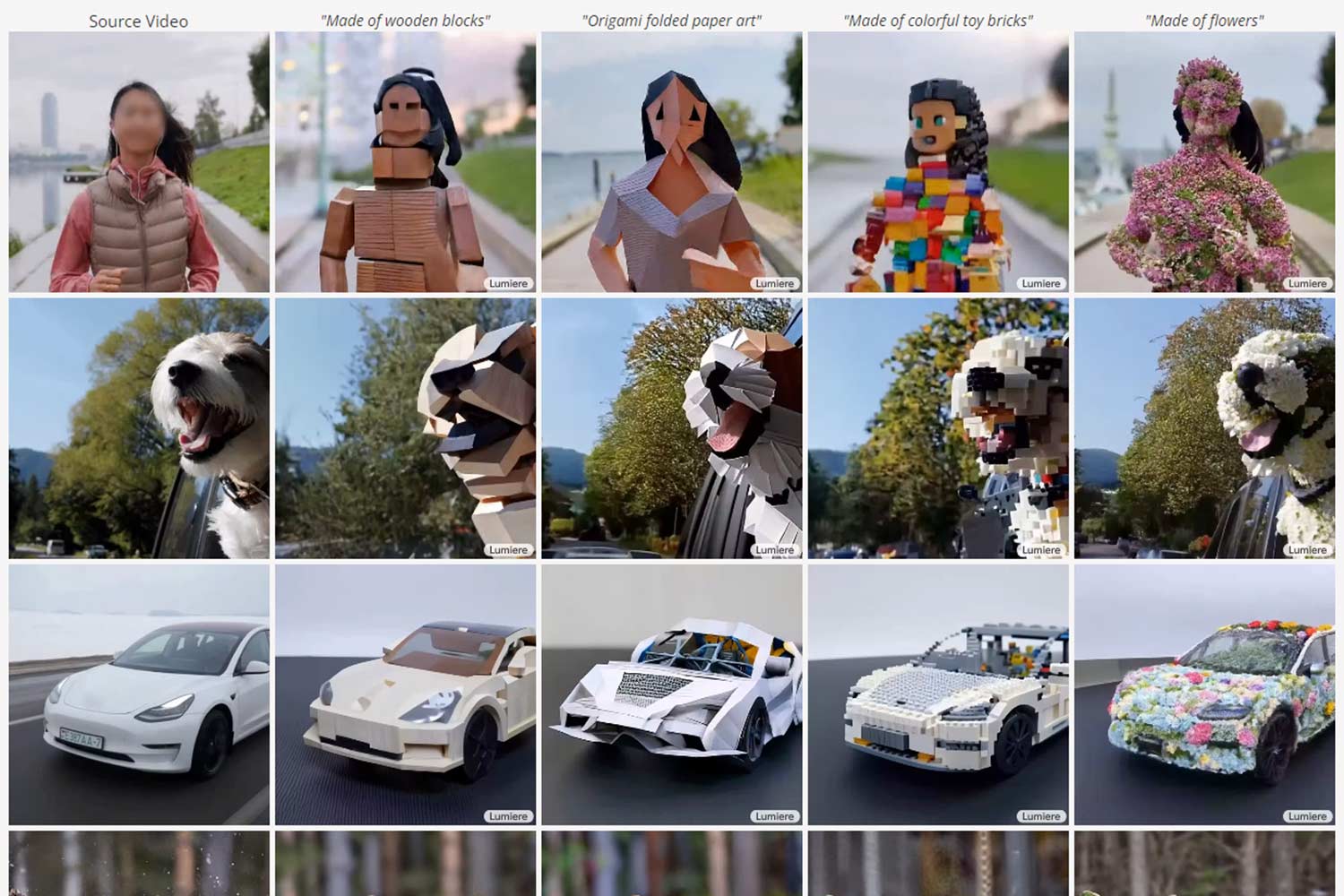

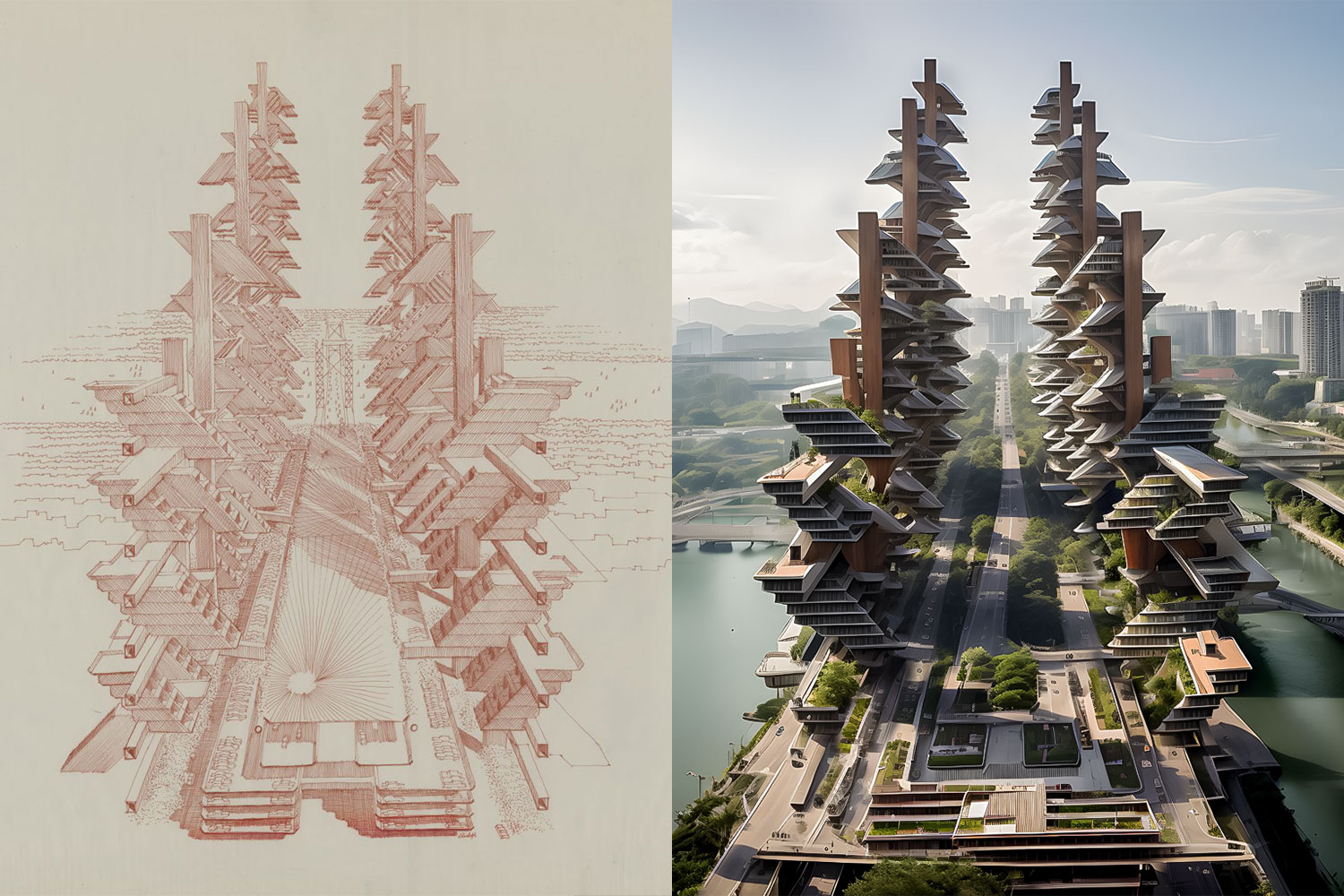

Notably, the new model can create videos in a desired style from a single reference image. It achieves this by using the fine-tuned weights of text-to-image models.

Lumiere also offers several modes of operation: converting text to video, animating still images, generating videos in a specific style based on provided samples, editing existing videos based on text prompts, animating specific regions of an image, and editing selected parts of a video.