Seoul-based architectural designer Lynn Kim (LynnSquared) shares her thoughts on and experience with AI image-generation tools, especially Midjourney.

Lynn Kim has extensive professional experience in architecture, interiors, and design, while her AI artwork focuses on realistic dreamscapes and architectural visions. Let’s dive into her creative world.

Before Midjourney

Before Midjourney, Lynn had always been fascinated by computational design. She explored this curiosity in an algorithmic design course, taught in Processing by Roland Snooks, at the University of Southern California, where she received her Bachelor of Architecture in 2011. The course focused on developing multi-agent algorithmic processes that encode architectural design decisions within computational agents, which interact to create an emergent architecture. During her career in architecture, Lynn incorporated parametric design through Rhino 3D and Grasshopper.

About LynnSquared’s artistic approach

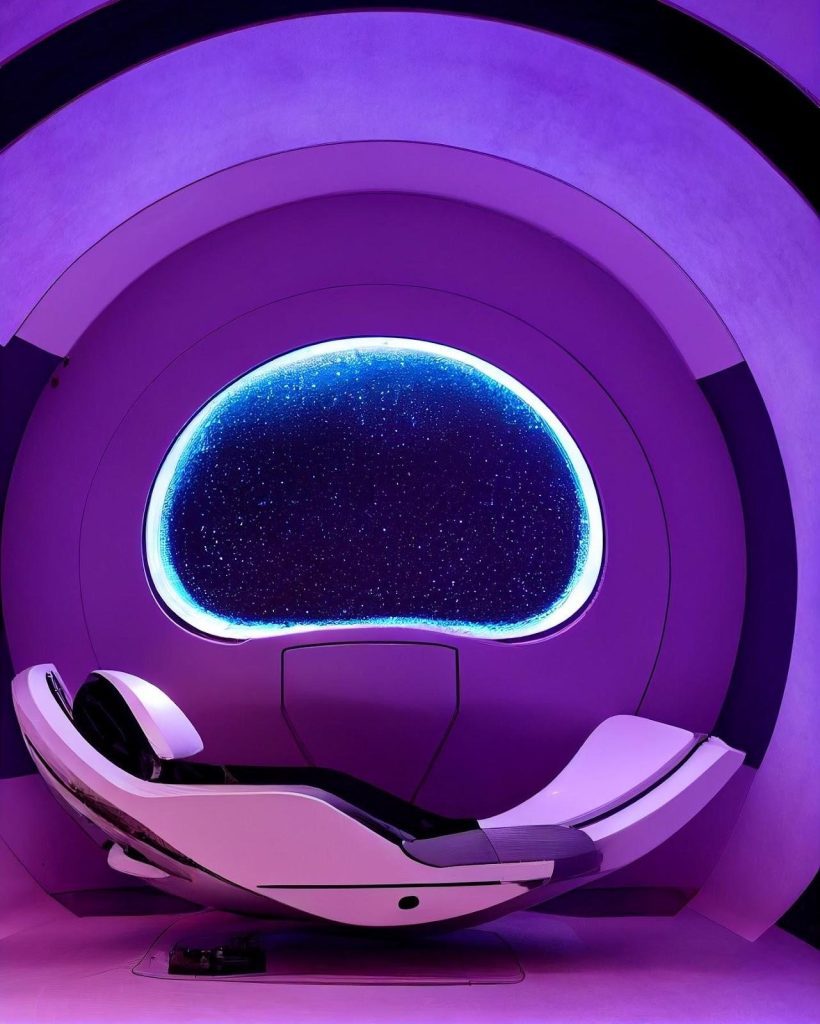

Lynn Kim’s ability to create compelling visions through AI makes her work captivating and sets her apart from other AI artists. Although her visions and concepts are futuristic and imaginative, her AI-generated artworks and virtual environments are highly immersive. They let viewers visually experience beautiful places and cultures they could never visit in real life.

Her latest work investigates the use of AI image-generation technology, such as Midjourney, to share her imaginative vision of the future through visual immersion. She is also researching ways to bring her two-dimensional AI-generated visions into three-dimensional virtual spaces in the metaverse, where people can visit and fully immerse themselves in her dreamscapes.

Using Midjourney and AI-generated text-to-image models

Text-to-image models grew out of earlier generative models such as Generative Adversarial Networks (GANs). Text-to-image models such as DALL-E 2, Stable Diffusion, and Midjourney have captured public attention since the summer of 2022, when they were opened to the public. Like DALL-E 2 and Stable Diffusion, Midjourney uses machine learning to generate images from text prompts. Lynn has tested different models, but Midjourney’s visual style aligns best with her artistic approach, so she currently uses it as her primary tool.

She usually starts with a vision, a mental image. Her prompt-crafting process then generally considers these components: space type, architectural style, spatial elements and forms, time of day, location, scene details, and render style. The order of the components, and of the keywords within the prompt, also affects how strongly each one influences the result, so she often experiments with different keywords and their placement within the prompt.
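To make the component list concrete, here is a minimal Python sketch of assembling a prompt from these components and generating reordered variants for comparison. It is an illustration under stated assumptions, not Lynn’s actual workflow: the component keys, the sample values, and the helpers `build_prompt` and `placement_variants` are hypothetical, and the code only produces text to paste into Midjourney; it does not call Midjourney.

```python
from typing import Dict, List

# Hypothetical component keys, following the order of components described in the article.
COMPONENT_ORDER: List[str] = [
    "space_type",
    "architecture_style",
    "spatial_elements",
    "time_of_day",
    "location",
    "scene_details",
    "render_style",
]


def build_prompt(components: Dict[str, str], order: List[str] = COMPONENT_ORDER) -> str:
    """Join the non-empty components into one comma-separated prompt, in the given order."""
    parts = [components[key].strip() for key in order if components.get(key, "").strip()]
    return ", ".join(parts)


def placement_variants(components: Dict[str, str], order: List[str] = COMPONENT_ORDER) -> List[str]:
    """Return one prompt per non-empty component, with that component moved to the front.

    This is a hypothetical aid for comparing how keyword placement changes the output.
    """
    variants = []
    for key in order:
        if not components.get(key, "").strip():
            continue  # skip components that were left empty
        reordered = [key] + [k for k in order if k != key]
        variants.append(build_prompt(components, reordered))
    return variants


if __name__ == "__main__":
    # Illustrative sample values only.
    scene = {
        "space_type": "luxury resort villa",
        "architecture_style": "tropical modernism",
        "spatial_elements": "cantilevered concrete terraces",
        "time_of_day": "golden hour",
        "location": "jungle in Bali, Indonesia",
        "scene_details": "waterfalls and mist",
        "render_style": "photorealistic",
    }
    print(build_prompt(scene))
    for variant in placement_variants(scene):
        print(variant)
```

Moving one component to the front at a time is just one simple way to compare placements. How Midjourney weighs word order is not specified here, so the variants are meant for side-by-side comparison rather than predictable control.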

How does Lynn collaborate with AI to create her immersive visions?

Trained as an architectural designer, Lynn Kim developed an eye for compelling architectural design through experience. According to Lynn, an AI artist specializing in architectural visions needs to understand what makes good architecture and space. Many design elements contribute to compelling architectural visuals: overall composition, building massing and proportion, materiality, camera angles, lighting, and surroundings. Understanding these fundamental architectural elements and visual techniques helps in crafting the prompt.

According to Lynn, Midjourney’s outputs can often be chaotic or out of proportion, and the generated image may feel “nonsensical.” Although this style can be a chaotically beautiful expression of surrealism, elements that are out of proportion or chaotic undermine the believability and apparent feasibility of the vision.

As an architectural designer, Lynn Kim is drawn to believable, photorealistic dreamscapes: visions that convince viewers they could become possible as technology advances. However, visions that are too grounded in reality may be boring and fail to inspire new ideas. Navigating the boundary between imagination and reality is therefore critical to creating visual immersion.

Lynn is a big fan of Christopher Nolan’s films, such as ‘Inception’ and ‘Interstellar,’ and of James Cameron’s ‘Avatar.’ Although these films are mind-bending and convey out-of-this-world visions, their visual immersion makes them very compelling. Their realism and attention to detail make the audience feel as though the cinematic world is ‘real’ and they could step right into it.

Lynn aims to achieve something similar by creating sequences of AI work. In film, a sequence is a series of scenes that form a distinct narrative unit, usually connected by a unity of location or time. Many of her AI works are generated in series, with scenes from different camera angles and depths of field developed with AI to convey a strong narrative. She pays close attention to keeping a coherent visual language across every scene through prompt crafting and by exploring many variations in Midjourney.
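The idea of holding a shared visual language constant while varying the shot can be sketched as a small Python helper. This is a hypothetical illustration, not Lynn’s documented process: `Shot`, `sequence_prompts`, and the sample keywords are invented for the example, and the code only generates prompt text. The images themselves would still be generated and curated in Midjourney.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Shot:
    """One scene in a sequence: a camera angle plus a depth-of-field note (hypothetical fields)."""
    camera_angle: str
    depth_of_field: str


def sequence_prompts(shared_style: str, subject: str, shots: List[Shot]) -> List[str]:
    """Build one prompt per shot.

    The subject and shared style keywords are repeated verbatim in every prompt,
    so only the camera framing changes from scene to scene.
    """
    return [
        f"{subject}, {shot.camera_angle}, {shot.depth_of_field}, {shared_style}"
        for shot in shots
    ]


if __name__ == "__main__":
    # Illustrative shots for a three-scene sequence.
    shots = [
        Shot("aerial wide shot", "deep depth of field"),
        Shot("eye-level view from the terrace", "shallow depth of field"),
        Shot("low-angle view from the waterfall", "medium depth of field"),
    ]
    for prompt in sequence_prompts(
        shared_style="photorealistic, soft morning light, muted earth tones",
        subject="resort pavilions hovering over a jungle waterfall",
        shots=shots,
    ):
        print(prompt)
```

Repeating the same subject and style keywords verbatim is one simple way to encourage consistency between scenes; it does not guarantee that the model renders them identically, so curating variations remains part of the work.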

AI x NIKE

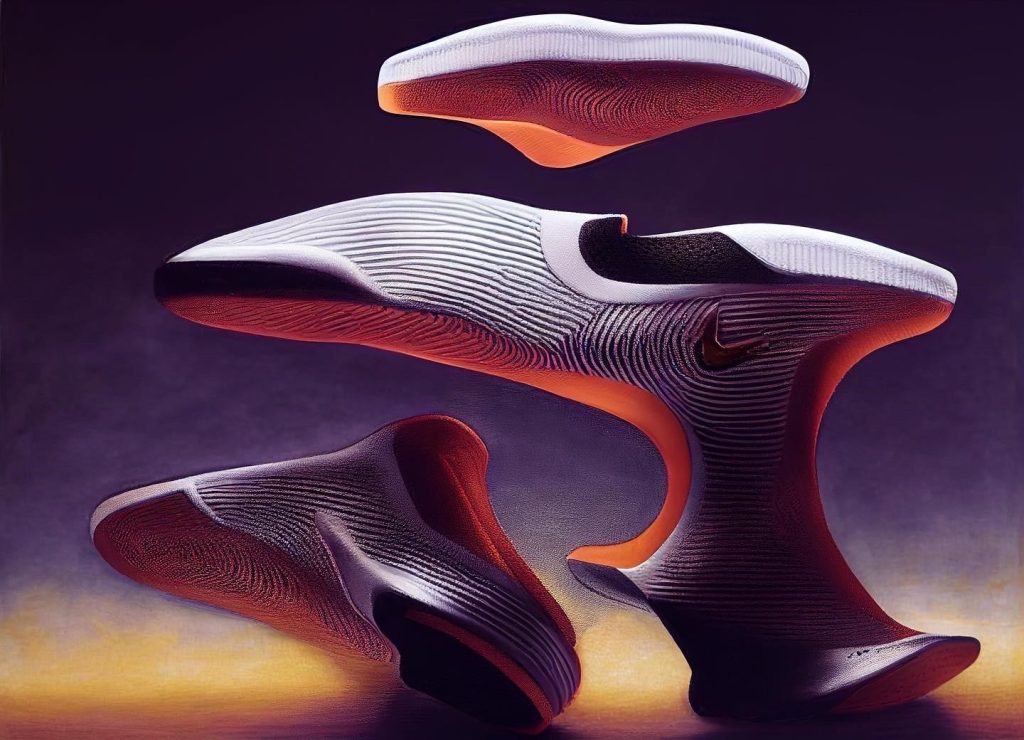

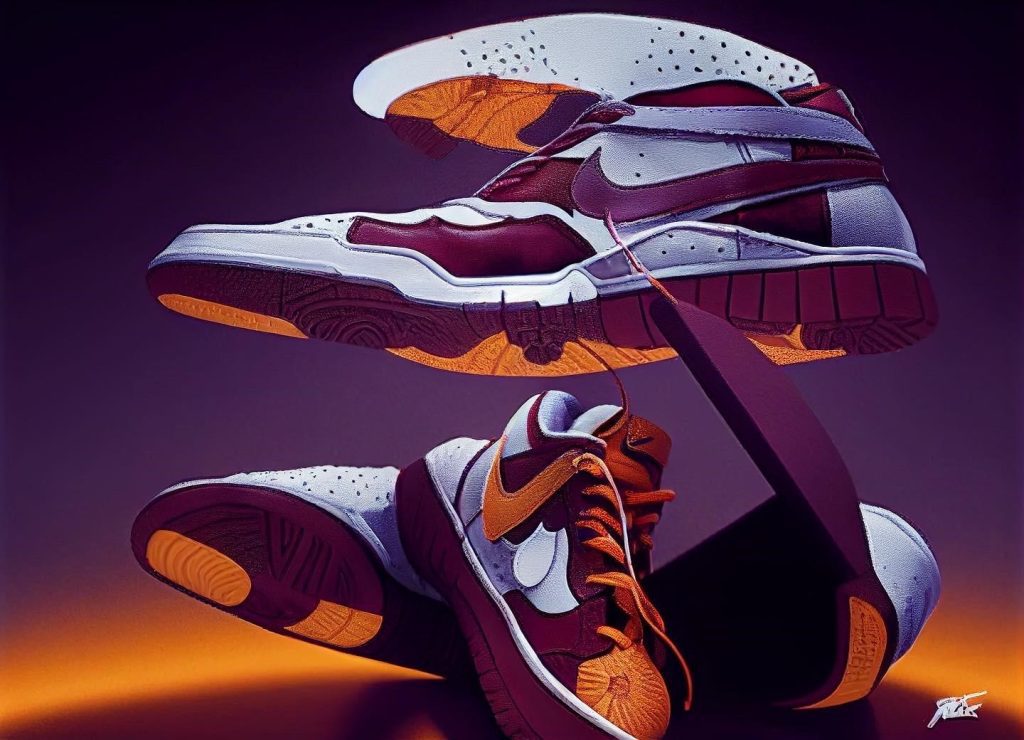

Recently, Midjourney released a new “remix” feature, which lets users control and edit the outcome of an image. Before this feature rolled out, there was no way to edit outputs, and users had to go through countless variations just for a chance at the desired result. She tested the limits of the “remix” feature with this AI x NIKE shoe concept. The series was not an actual collaboration with the NIKE brand but her own exploration of how AI tools can be useful to commercial brands.

First, Lynn Kim started with sculptural forms, which she later used as a base for the concept. According to Lynn, once she had an output with forms, composition, and colors she liked, she used the remix feature to edit the text prompt, adding the twist “futuristic Nike Concept shoe.” The AI then worked within the rough parameters set by the previously defined forms and composition and tried to make sense of the remixed prompt.

The results were impressive: the variations try to comply with the previously defined parameters while still being playful and exploring different shoe designs.

This process showed the potential of AI as a controllable design tool that designers, architects, and even commercial brands can integrate into their design process.

Getaway Series

Simulated reality, a concept often associated with “The Matrix,” is another notion Lynn aims to explore in her work. On Joe Rogan’s podcast, Elon Musk said, “If you assume any rate of improvement at all, games will eventually be indistinguishable from reality… We’re most likely in a simulation.” The simulation hypothesis proposes that our entire existence is a simulated reality, such as a computer simulation. Whether or not this is true, Lynn is fascinated by the theory because it lets us expand our imagination beyond the limits of physical reality.

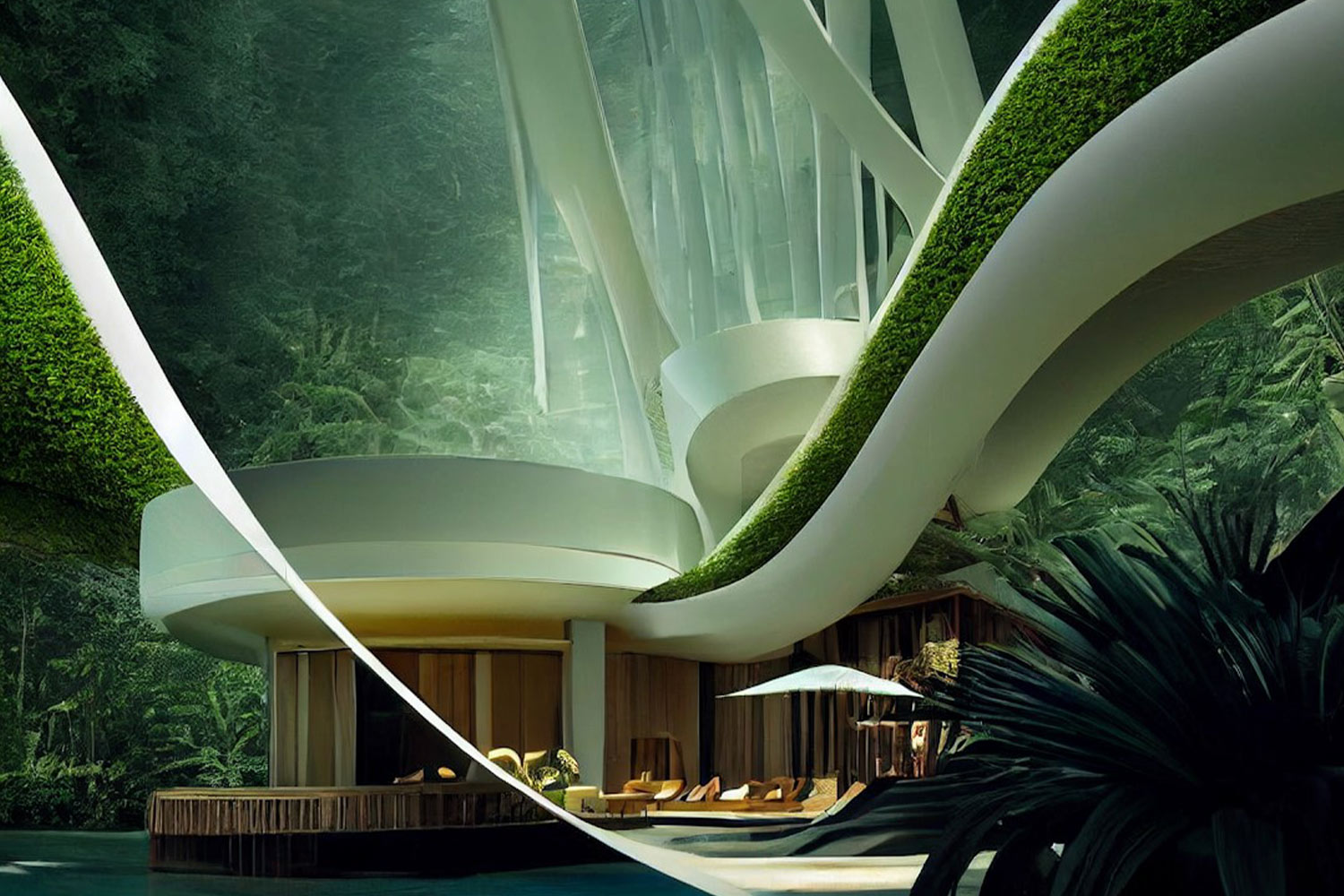

Getaway Series is an AI-generated vision with the simulation hypothesis as its underlying message. Created in Midjourney, the series invites viewers to rethink physical reality and imagine what it would be like to live in a fantastical simulated reality.

First, Lynn generated a series of images of what could be a real-life luxury resort in the middle of the jungle in Bali, Indonesia. She then invested time in refining the compositions and details in Midjourney so that the images came close to photorealism.

According to Lynn Kim, through strategic sequencing, the series gradually evolves into a test of the limits of physical constructability.

The structures cantilever and hover over waterfalls; one can only imagine how spectacular it would be to experience them in person.

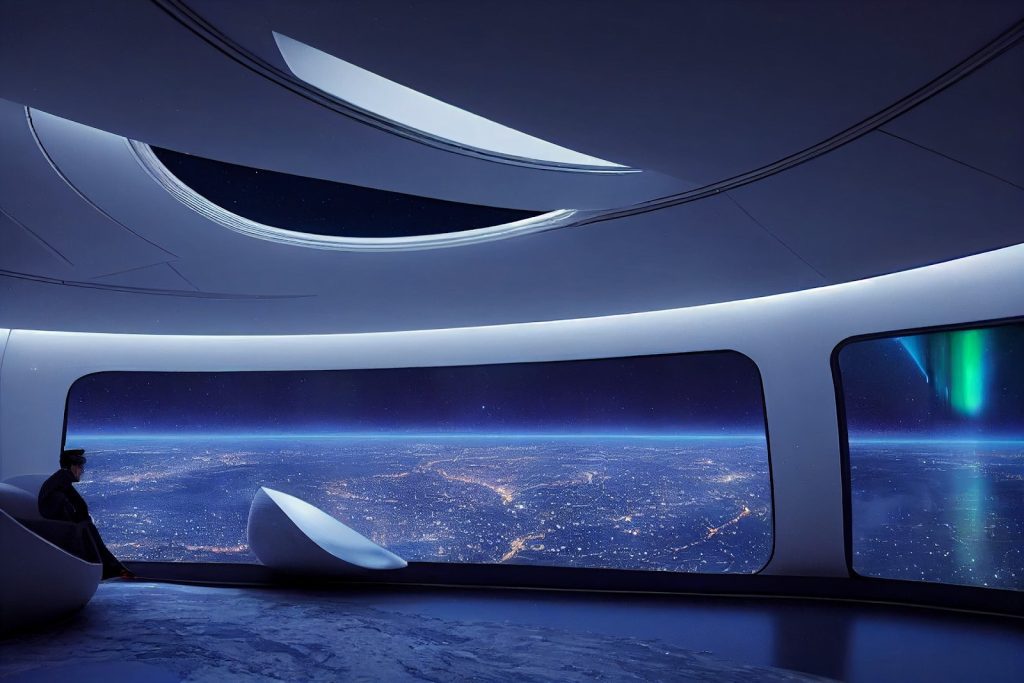

Overview Effect

Lynn recently read about William Shatner’s trip to space and how it left him overwhelmed with sadness. Shatner describes this psychological effect, which astronauts often feel, as the “Overview Effect.” The Varsity article further explains, “essentially, when someone travels to space and views Earth from orbit, a sense of the planet’s fragility takes hold in an ineffable, instinctive manner. It can change the way we look at the planet but also other things like countries, ethnicities, and religions; it can prompt an instant reevaluation of our shared harmony and a shift in focus to all the wonderful things we have in common instead of what makes us different.”

Inspired by William Shatner’s experience, Lynn wanted to give viewers a visual representation that would stir emotions similar to those she felt. Space travel is something the general public cannot experience, so with the help of AI, she generated a visual representation of William Shatner’s space travel experience.

For Lynn, what is impressive about AI-generated images is that they let us visually explore and expand our imaginations, even into experiences, and perhaps feelings, we have never had before.

Lynn’s thoughts on AI and its role in Architecture and the Metaverse

Artificial intelligence will likely play an essential role in architecture and the metaverse. In Lynn’s opinion, AI will become crucial for architects in creating more innovative designs in the physical world. It will give architects greater efficiency, expand creativity and imagination in the design process, and help advance traditional construction technology and methodology. In the metaverse, AI will be used to create more immersive and realistic virtual environments, letting people experience places and cultures they could never visit in real life.

Find more AI-generated artworks by LynnSquared.

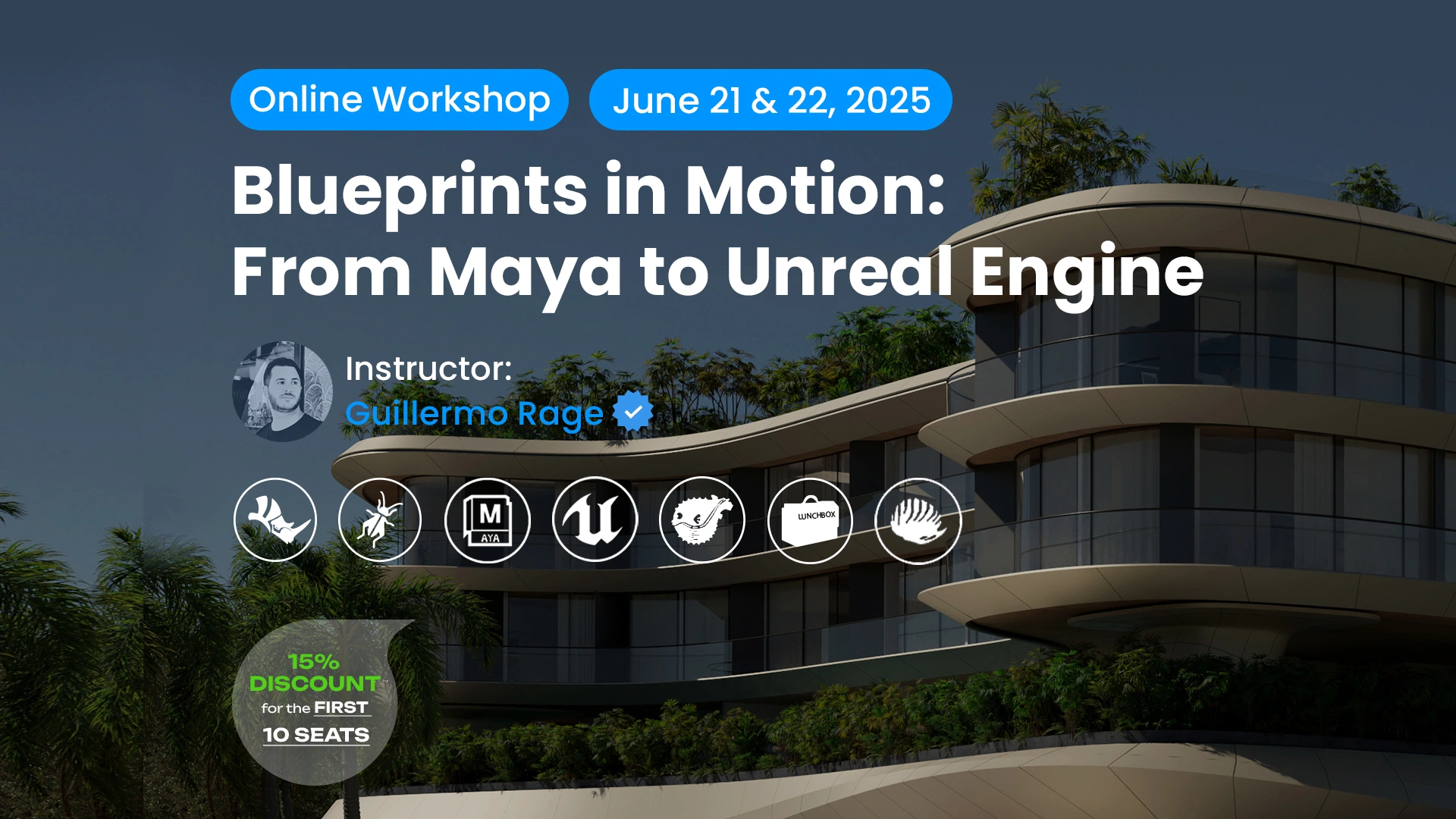

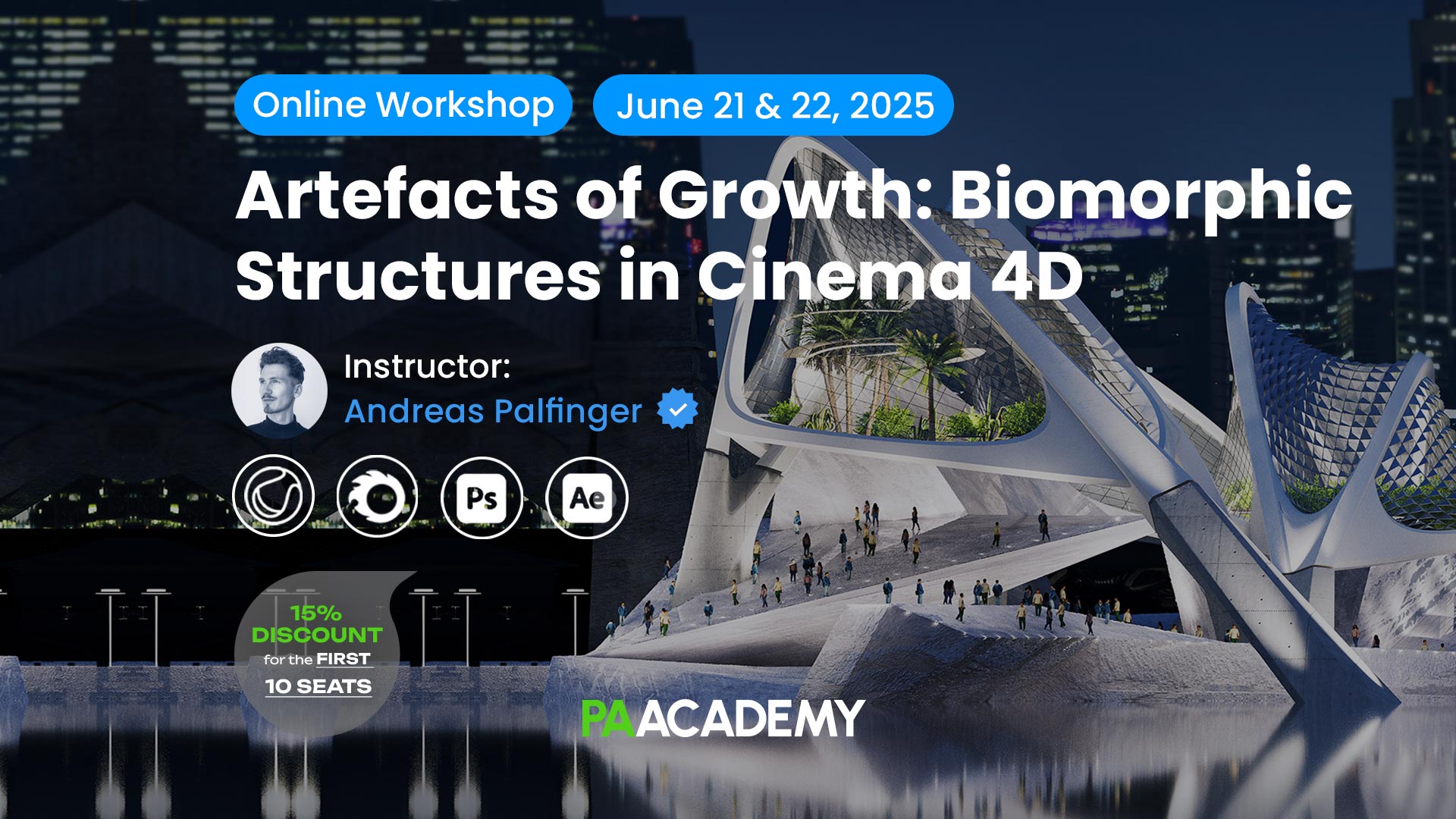

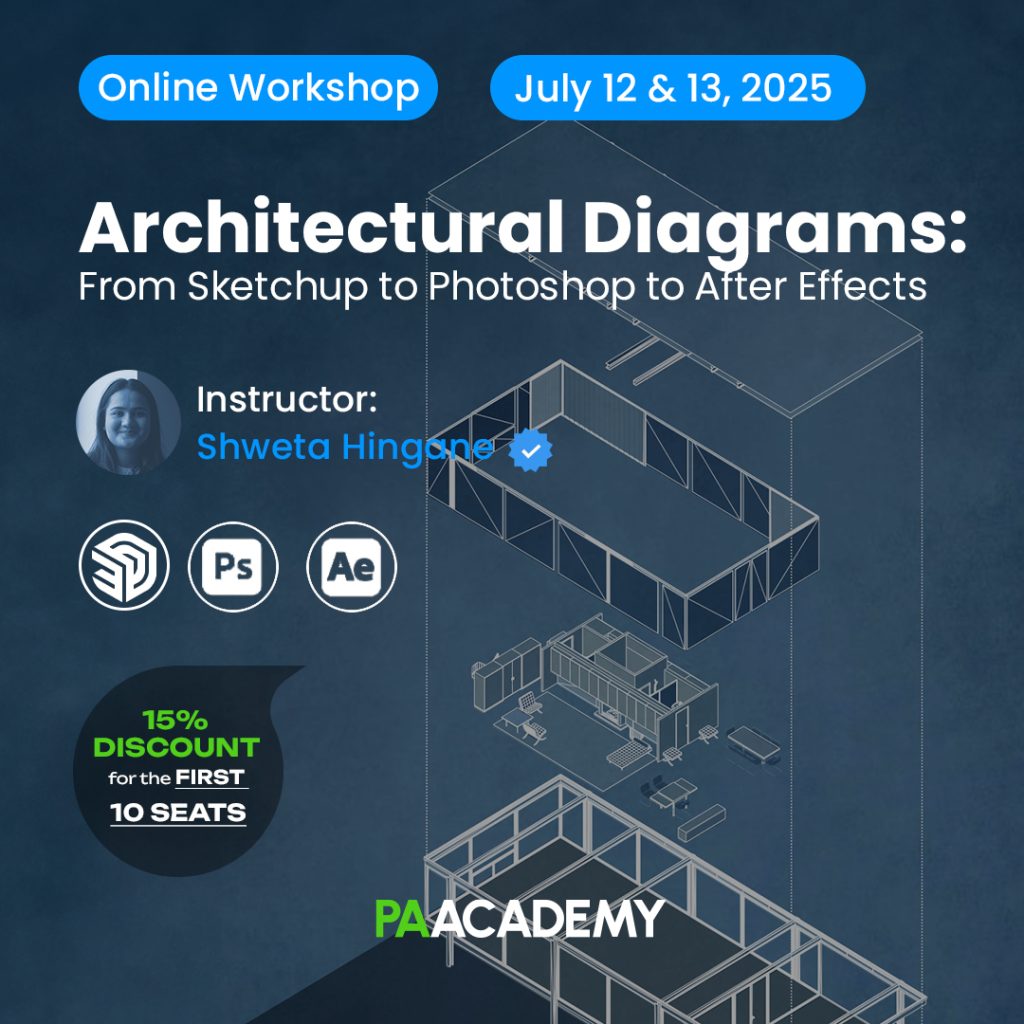

If you are curious to dive deeper into Midjourney and AI-generated tools, you can check out the “Midjourney Architecture 2.0 / Studio Tim Fu” workshop by PAACADEMY.
