Snap Inc. is doubling down on its augmented reality ambitions with the launch of its fifth-generation Spectacles, an AR headset that actively responds to the world around the user. Built for developers but rich with consumer potential, these glasses push AR beyond novelty, transforming it into an immersive, intelligent assistant for how people see and interact with their environment.

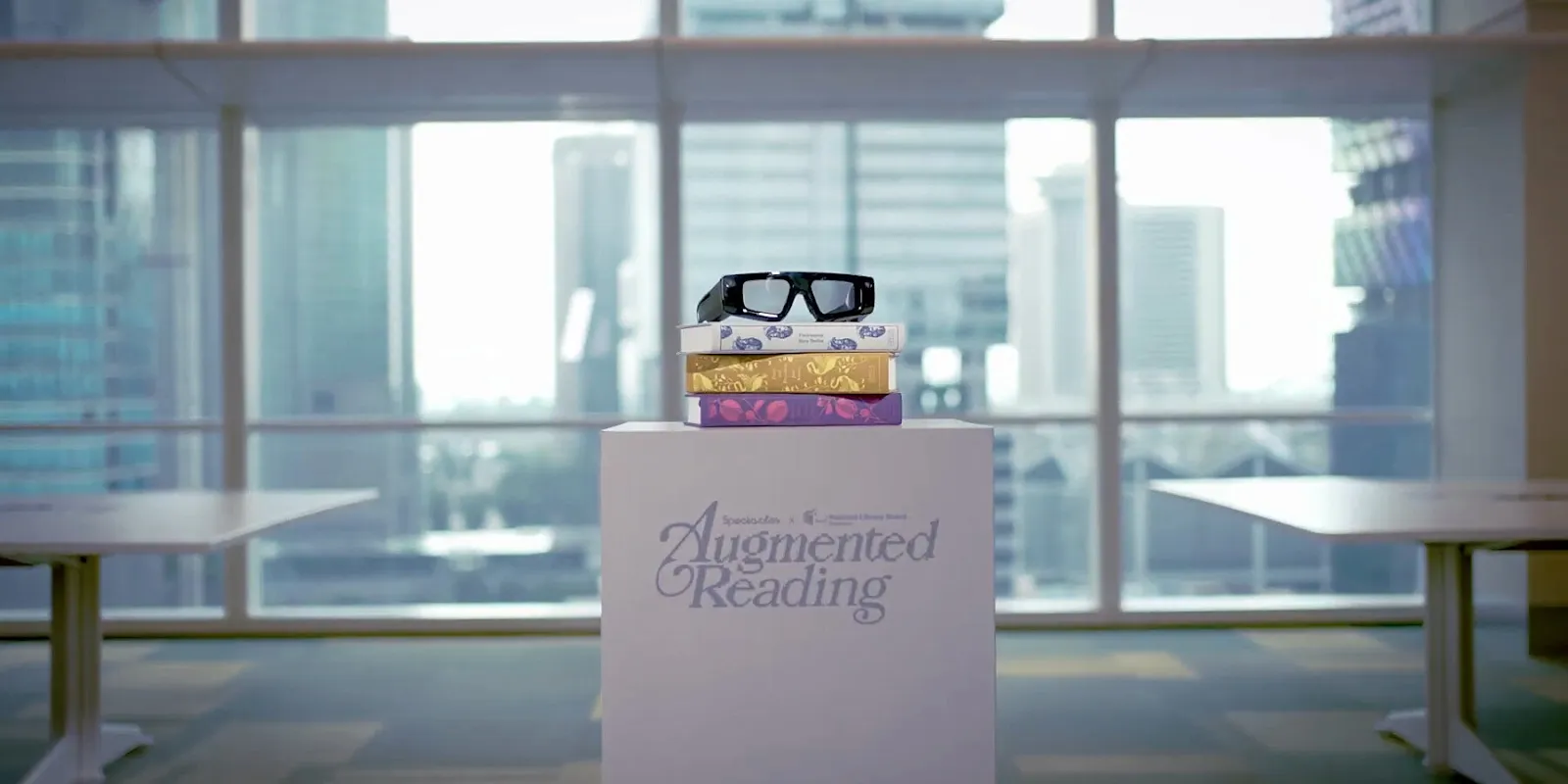

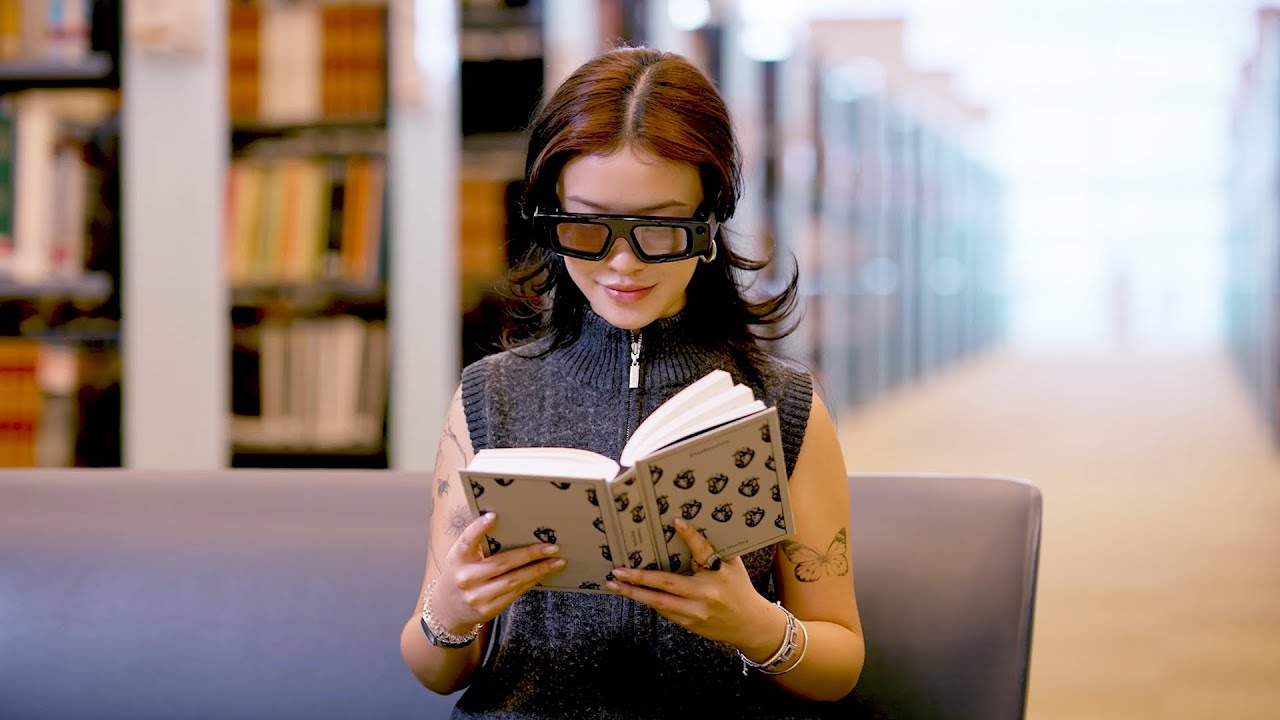

One of the standout features, Augmented Reading Lenses, introduces something entirely new: as a user reads a physical book, the glasses scan the text, interpret the context using OCR and AI, and respond with synchronized visuals and soundscapes. It marks a technological first for Snap and offers a glimpse into what reading could look like in the age of spatial computing.

What These Glasses Can Do

The latest Spectacles provide a 46-degree diagonal field of view, which lets users see graphics comparable to a 100-inch display viewed from roughly 10 feet away, without a conventional headset or handheld screen. Snap uses Liquid Crystal on Silicon (LCoS) micro-displays and waveguides to project digital content directly into the user’s field of vision. The 37-pixel-per-degree resolution produces a crisp image suited to most augmented reality applications.
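The field-of-view figures above can be sanity-checked with basic trigonometry. The sketch below is a back-of-the-envelope calculation, not anything from Snap: it assumes a flat virtual screen centered in view and a uniform pixel density across the field of view, and the function names are made up for illustration.

```python
import math


def viewing_distance_in(diagonal_in: float, fov_deg: float) -> float:
    """Distance (inches) at which a flat screen of this diagonal spans fov_deg."""
    # Half the diagonal and the viewing distance form a right triangle
    # whose angle at the eye is half the field of view.
    return (diagonal_in / 2) / math.tan(math.radians(fov_deg / 2))


def pixels_across(fov_deg: float, pixels_per_degree: float) -> float:
    """Approximate pixel count along the field of view (assumes uniform density)."""
    return fov_deg * pixels_per_degree


distance_in = viewing_distance_in(100, 46)
print(f"100-inch diagonal at 46 degrees: about {distance_in / 12:.1f} ft away")
print(f"Pixels along the diagonal: about {pixels_across(46, 37):.0f}")
```

Under these assumptions, a 100-inch screen fills a 46-degree diagonal at a little under 10 feet, and 37 pixels per degree works out to roughly 1,700 pixels along the diagonal.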

The lenses have electrochromic tinting, which darkens or lightens them according to the ambient light. This helps keep AR content visible both indoors and outdoors in bright light.

The frames pack four cameras that work together with Snap’s Spatial Engine to track gestures, hand movements, and the world around the user in real time. This real-world awareness helps the glasses place digital elements exactly where they belong, anchored to the physical space, not floating randomly.
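To make "anchored to the physical space" concrete, here is a minimal 2D sketch of the underlying idea. It is not Snap's Spatial Engine or any Snap API: the function name, the flat 2D world, and the yaw-only head pose are all simplifying assumptions. The anchor keeps fixed world coordinates, and its position relative to the wearer is recomputed whenever the head moves or turns.

```python
import math


def world_to_head(anchor_xy, head_xy, head_yaw_deg):
    """Express a world-fixed point in the head's own frame.

    Hypothetical helper: head_yaw_deg is the counterclockwise rotation of
    the head's axes relative to the world axes.
    """
    dx = anchor_xy[0] - head_xy[0]
    dy = anchor_xy[1] - head_xy[1]
    yaw = math.radians(head_yaw_deg)
    # Translate by the head position, then apply the inverse head rotation.
    x_head = math.cos(yaw) * dx + math.sin(yaw) * dy
    y_head = -math.sin(yaw) * dx + math.cos(yaw) * dy
    return (x_head, y_head)


anchor = (0.0, 2.0)  # a virtual object pinned 2 m from the world origin

# The anchor never changes; only its head-relative coordinates do.
print(world_to_head(anchor, (0.0, 0.0), 0))
print(world_to_head(anchor, (0.0, 1.0), 0))
print(world_to_head(anchor, (0.0, 0.0), 90))
```

A head-locked overlay would skip this transform and draw at the same screen position regardless of pose, which is what makes content appear to float with the wearer instead of staying put in the room.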

Audio is also central to the experience. With built-in stereo speakers, the glasses support spatialized sound, enhancing the realism of features like the Augmented Reading Lenses. This allows users to hear immersive cues, such as a door creaking or water splashing, triggered directly by the text being read.

The Augmented Reading Experience

The Augmented Reading Lenses result from a collaboration between Snap, the National Library Board of Singapore, and creative agency LePub Singapore. When a user reads a book such as Frankenstein, the glasses do more than project static imagery; they bring the story to life through sound and motion.

Using optical character recognition (OCR), the glasses identify what the user is reading in real time. Generative AI then interprets the narrative and produces matching 3D animations. For example, reading about a thunderstorm may result in lightning visuals and atmospheric sound effects. These elements appear in sync with the reader’s pace, creating a responsive and personalized storytelling experience.
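The flow described above (recognized text in, matching visuals and sound out) can be illustrated with a deliberately simplified sketch. This is not how Snap's system works internally: a hypothetical keyword table stands in for the generative AI step, the OCR output is assumed to arrive as a plain string, and the `Cue` type, `CUES` table, and `cues_for_text` function are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    visual: str
    sound: str


# Hypothetical lookup table standing in for the generative AI step.
CUES = {
    "thunderstorm": Cue(visual="lightning", sound="thunder"),
    "door": Cue(visual="door_swing", sound="door_creak"),
    "water": Cue(visual="ripples", sound="water_splash"),
}


def cues_for_text(recognized_text: str) -> list[Cue]:
    """Return cues in the order their keywords first appear in the text."""
    seen = set()
    cues = []
    for raw_word in recognized_text.split():
        # Normalize each word so punctuation and case do not block a match.
        word = raw_word.strip(".,;:!?\"'").lower()
        if word in CUES and word not in seen:
            seen.add(word)
            cues.append(CUES[word])
    return cues


page = "The thunderstorm broke just as the door swung open."
for cue in cues_for_text(page):
    print(cue.visual, cue.sound)
```

Keeping the cues in reading order is what would let a player trigger them in step with the reader's progress through the page; a real system would also need timing information from the OCR stage, which this sketch omits.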

Notably, the system is responsive enough to keep up with normal reading speed. When the user looks up from the page, related imagery appears in their field of vision, maintaining immersion without distraction. It’s a form of ambient storytelling that enhances traditional reading with reactive visual and auditory layers.

Voice, AI, and Interaction

Snap OS, the glasses’ custom operating system, controls all interactions and system activities. It lets users navigate apps and interfaces with natural hand gestures such as pinching or swiping, as well as voice commands.

Integrated within Snap OS is “My AI,” Snap’s conversational assistant. Users can summon it to generate on-the-fly animations, answer contextual questions, or overlay helpful visuals. In one demonstration, a user asked, “Show me what this battle looked like,” and received a stylized, AI-generated re-creation in their field of vision. It’s not quite a hologram, but it’s the closest iteration yet in mainstream AR hardware.

Developer Focused, For Now

At this stage, Snap is limiting access to developers through a subscription model priced at $99 per month. This fee includes the hardware and access to Snap’s Lens Studio development environment. The goal is to seed a robust creative ecosystem before moving toward consumer release.

By focusing on developers first, Snap aims to spur innovation and discover the most compelling use cases for AR in fields like education, media, design, and entertainment. The Augmented Reading Lenses are just one early example of the types of applications the company expects to see grow from this strategy.

Real Limitations, Real Potential

Despite their innovation, the current-generation Spectacles are not without limitations. Battery life is capped at under an hour, restricting use to shorter, focused sessions. The frames are also bulkier than typical eyewear, a common tradeoff at this early stage of AR hardware.

Still, Snap is taking a methodical approach. A beta test for the Augmented Reading Lenses is planned in Singapore for late 2025, allowing the company to gather feedback and refine both the hardware and software experience ahead of broader deployment.

Snap’s fifth-generation Spectacles represent a meaningful step toward bringing augmented reality into everyday life. Snap is not just layering graphics over reality; it is also rethinking how AR can respond, resonate, and feel lived-in. With tight engineering, smart AI, and a developer-first mindset, the company is turning augmented reality into something that reacts and matters.

Specs Snapshot

- Weight: 226g, noticeably heavier than ordinary sunglasses but manageable for short AR sessions.

- Field of View: 46° diagonal, 37 pixels per degree resolution.

- Cameras: 4 total, with environmental and hand-tracking capability.

- Audio: Built-in stereo speakers with spatial sound support.

- Battery Life: About 45 minutes of continuous use; recharges via USB-C.

- Sensors: Includes GPS/GNSS positioning, accelerometers, and gyroscopes for spatial awareness.
