Meta is redefining what’s possible in robotics and AI with the release of V-JEPA 2, its latest open-source “world model” designed to help robots understand and operate in environments they’ve never encountered before. This next-generation AI architecture signals a major shift toward autonomous systems that can learn, plan, and act with minimal human supervision, a milestone in Meta’s broader push toward advanced machine intelligence.

What Is V-JEPA 2?

While robotics has made impressive strides in controlled environments, robots still tend to fall short in dynamic, real-world scenarios they haven’t been specifically trained for. Whether in manufacturing, logistics, or home automation, unpredictable settings have long been a pain point. Meta’s V-JEPA 2 directly addresses that issue.

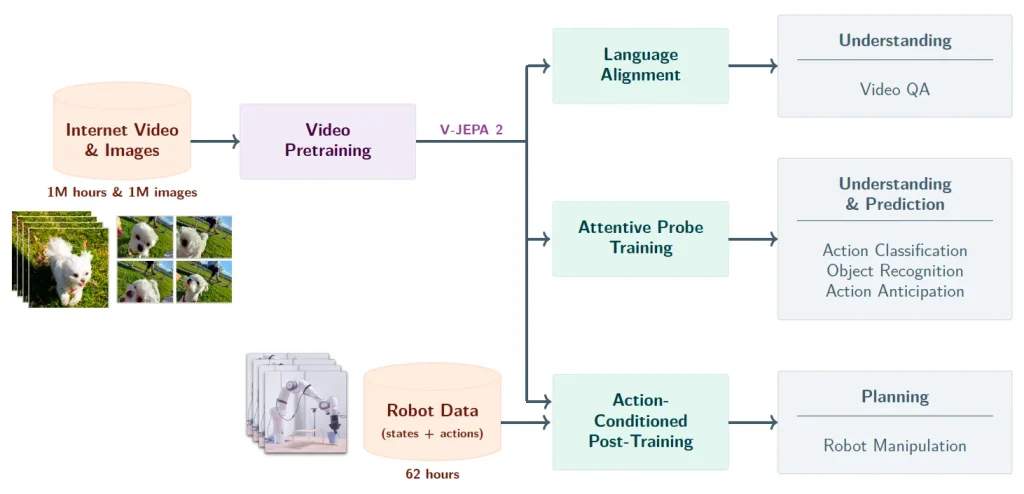

At its core, V-JEPA 2, short for Video Joint Embedding Predictive Architecture 2, is trained to recognize what it sees and predict what’s likely to happen next. The model was trained on more than one million hours of video and one million diverse images, allowing it to absorb nuanced knowledge about motion, interaction, and cause-and-effect relationships in physical spaces. This makes V-JEPA 2 particularly well suited to tasks like robotic grasping.

The model is designed to process video data, extract temporal information, navigation cues, and object placement, and even pick up on subtle human-object interactions, all without prior exposure to the exact situation. For instance, if a robot sees a door handle, V-JEPA 2 helps it reason about how that object might move, even if it has never seen that specific door before.

How It Works

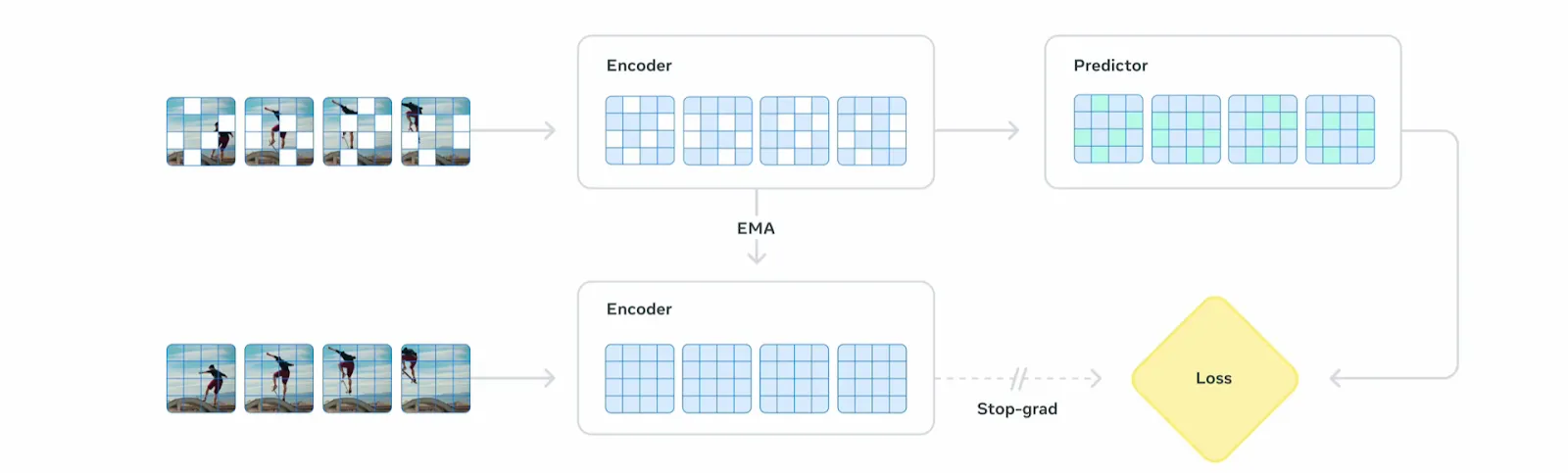

V-JEPA 2 consists of two core components:

- An encoder that transforms raw video into rich, compact representations (embeddings)

- A predictor that uses those embeddings to forecast future outcomes and guide decision-making

V-JEPA 2 learns via self-supervised learning, identifying patterns and logic in the world simply by watching how objects move and interact rather than relying on human-labeled examples. This training approach is what makes it leaner, faster, and more generalizable than past models.
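To make the encoder/predictor split concrete, here is a minimal Python sketch of how the two pieces could work together to pick an action. It is a toy illustration, not Meta's implementation: the real components are learned neural networks, while the `encode`, `predict`, and `choose_action` functions below are hand-written, hypothetical stand-ins. The assumption is that an agent encodes the current frame and a goal frame, asks the predictor what each candidate action would lead to, and picks the action whose predicted embedding lands closest to the goal.

```python
from typing import List, Sequence, Tuple

Frame = List[List[float]]        # toy grayscale frame (rows of pixel intensities)
Embedding = Tuple[float, float]  # toy 2-D representation of a frame
Action = Tuple[float, float]     # hypothetical (d_row, d_col) motion command


def encode(frame: Frame) -> Embedding:
    """Toy encoder: compress a frame into its intensity-weighted centroid."""
    total = sum(sum(row) for row in frame)
    if total == 0:
        raise ValueError("frame has no signal to encode")
    r = sum(i * sum(row) for i, row in enumerate(frame)) / total
    c = sum(j * v for row in frame for j, v in enumerate(row)) / total
    return (r, c)


def predict(state: Embedding, action: Action) -> Embedding:
    """Toy predictor: forecast the next embedding if `action` is applied."""
    return (state[0] + action[0], state[1] + action[1])


def distance(a: Embedding, b: Embedding) -> float:
    """Squared Euclidean distance between two embeddings."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def choose_action(current: Frame, goal: Frame,
                  candidates: Sequence[Action]) -> Action:
    """Pick the action whose predicted outcome is closest to the goal embedding."""
    z_now, z_goal = encode(current), encode(goal)
    return min(candidates, key=lambda a: distance(predict(z_now, a), z_goal))


def make_frame(row: int, col: int, size: int = 5) -> Frame:
    """Build an empty frame with one bright 'object' pixel."""
    frame = [[0.0] * size for _ in range(size)]
    frame[row][col] = 1.0
    return frame


current = make_frame(1, 1)   # object starts at row 1, column 1
goal = make_frame(1, 3)      # we want it at row 1, column 3
candidates = [(0.0, 1.0), (0.0, -1.0), (1.0, 0.0), (-1.0, 0.0)]
best = choose_action(current, goal, candidates)
print(best)  # (0.0, 1.0): move one column to the right
```

The point of the sketch is that all comparison happens in embedding space: the agent never has to generate future pixels, only a compact forecast it can score against the goal.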

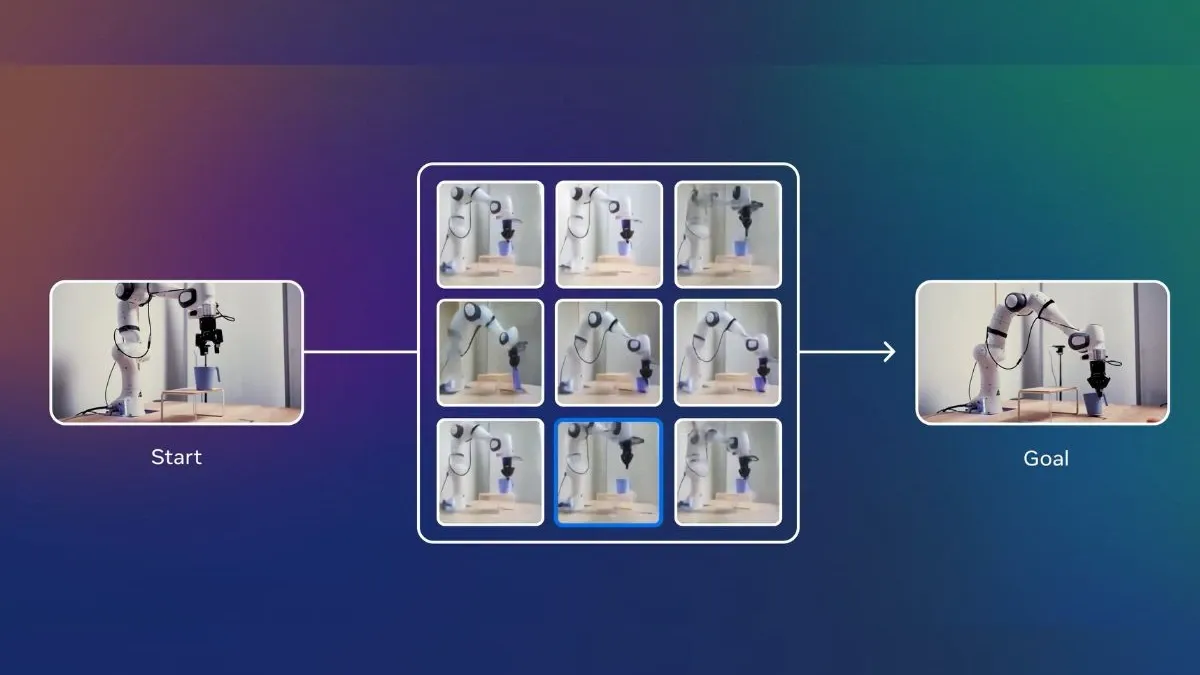

High Performance on Real-World Tasks

Meta reports that V-JEPA 2 achieved success rates of 65% to 80% on robotic pick-and-place tasks, demonstrating real-world utility in object manipulation, a foundational skill for many types of robots. It also excels in video-based question answering and can anticipate actions up to one second into the future, an advantage in time-sensitive settings.

In robotics, this level of temporal and spatial reasoning is essential. According to Wyatt Mayham, lead AI consultant at Northwest AI Consulting, “The core challenge in robotics has always been dealing with unstructured environments. V-JEPA 2 represents a genuine step toward solving that.”

Broader Implications

Meta sees potential use cases for V-JEPA 2 in:

- Manufacturing automation

- Warehouse and in-building logistics

- Surveillance and situational awareness

- AI agent simulation and training

The architecture is particularly exciting in its ability to power low-supervision, agentic AI systems that can adapt on the fly and even evolve their strategies over time. This unlocks the potential for truly general-purpose robots that don’t just react but intelligently plan in unknown environments.

A Step Toward Embodied AI

Meta’s research is part of a larger trend known as embodied AI, where the goal is to build agents that perceive, reason, and act in the world as humans do. The ability to generalize across unknown situations is central to this vision, and V-JEPA 2 is a significant milestone.

The implications are vast. Imagine home robots that can clean without being told what’s out of place, or delivery drones that reroute on the fly when conditions change. V-JEPA 2 may not be the final answer, but it’s a substantial leap in that direction.

Ankit Chopra, director at Neo4j, put it succinctly: “This is a quiet but significant moment in AI development. V-JEPA 2 moves us beyond traditional perception models, toward machines that understand and respond to the world like intelligent agents.”

With V-JEPA 2, Meta is pushing the boundaries of what’s possible in AI and robotics. As we move closer to a future where AI agents operate independently in human environments, V-JEPA 2 stands out as one of the most important contributions of 2025 to the field of autonomous robotics.
